AI, Democracy, and the Human Future

Just a few days ago, officials in Saudi Arabia granted citizenship to a robot. One might laugh this off as a PR stunt designed to showcase recent work on AI, but the gesture raises serious issues that will affect the whole future of our species.

When we look at this event in a larger context, it serves as an urgent reminder that we need to establish a framework for regulating AI if we want to maintain prospects for a livable human future.

It’s easy – at least in the Western world – to look at human society and human history and say: I’m going to bet on democracy, capitalism, science, and technology. These have served us well and worked well together – giving great freedom to the individual, increasing life expectancy, and improving the general standard of living.

But there’s a question: Is there any assurance that this “D, C, S & T system” will always work well? Can this system handle all the unintended consequences of the new technologies it creates?

We’ve already seen ways in which the system can falter and fail: the burning of fossil fuels has led to global warming, new fishing technology can deplete vital fish stocks, and the development of the computer and the Internet has brought cybercrime, cyberwarfare, and new ways of meddling in the electoral process. New developments in AI now pose an even deeper risk.

I’ve eagerly followed work in this field since the mid-1980s, when I started thinking about how AI could be relevant to problems of protein design. And as a scientist, I find it exciting to see all the recent advances: increases in computer power and new coding methods have allowed computers to beat humans at checkers, then chess, then the quiz show Jeopardy!, and most recently the game Go.

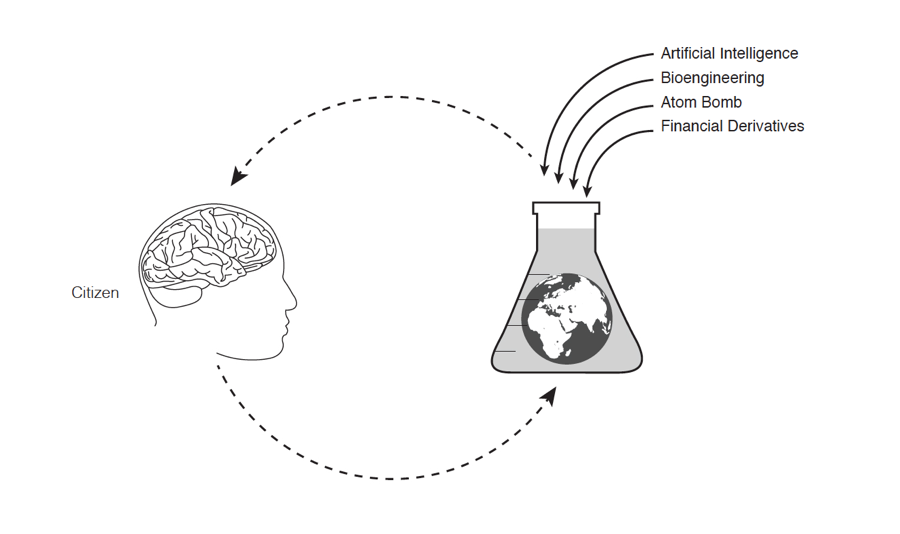

But I’m concerned about the implications of AI for society, and, more broadly, I’m concerned because it’s hard to foresee, measure, or address the side effects and cross terms (the interactions among different technologies) arising from all these recent advances in science and technology.

We tend to just add new technology, mix it with everything else on the planet, and wait to see what happens.

As I consider potential effects of AI, I’ll skip all the Pollyanna scenarios in which AI is only used for the general human good; and I’ll skip all the dark scenarios in which AI leads to dangerous new modes of cybercrime, warfare, and financial fraud. I’ll just talk about the middle path and consider some relatively straightforward extrapolations from what’s already happening.

To be clear: I try to look out a few decades ahead, and everything below is based on the assumption that technology will continue to advance at a rapid rate. It’s hard to pick particular winners and losers – as one needs to do when investing as a venture capitalist. But there’s so much effort, so many billions of dollars invested, so many new approaches being tested that – as Ray Kurzweil has emphasized for years – rapid progress seems assured.

The first and perhaps most obvious social problem raised by advanced computing technology is job loss, as we already see among factory workers, office workers, and retail employees. There’s no good way to predict the precise rate and extent of job loss, but it will certainly be disruptive in a “knowledge economy.” Money and power will accumulate in the hands of a technological elite who own the most computers and the best software. Unemployment and underemployment will be devastating for everyone else and may tear the very fabric of the social system. There’s a potential for increased drug use, increased crime, and an increased risk that some demagogue will appear who promises to fix all our problems.

These concerns have prompted some initial experiments, such as those championed by Sam Altman, to test how handing out money might help ensure that everyone has some “living wage.” But nothing in the U.S. tax structure, in the mood of the president and Congress, or in the country’s current financial stability (with a cumulative federal debt now over $20 trillion) suggests that the U.S. is ready to offer help at this level.

And just behind this first shock wave of unemployment lies another problem, perhaps even scarier for democracy: the whole purpose of an educational system is undermined if there’s no meaningful prospect of a job after decades of study. We may soon wonder: Why bother funding a system of public education? Why study hard? How could citizens dependent on handouts ever save the money needed for college, and why would anyone bother with the expense?

Thus, with little need for an educated (human) workforce, we simultaneously undermine and eliminate the kind of educational system that has produced an informed citizenry able to elect wise leaders and help meet the challenges of the modern age. The result could be a multilevel disruption of society: we risk weakening the very mechanisms of democratic governance that might otherwise offer some hope of sorting out the challenges inherent in this first tidal wave of disruption.

Some of these risks will be hard to handle, but we need to try. We need to do our best to protect society against these dangers, at the very least by working to maintain a system of governance that can help us deal with all the other challenges.

I’m open to discussion on details, but I’ve studied human thought long enough to know that we’ll need to work at several different levels if we want a plan with some overall coherence. If we want to preserve prospects for a livable human future, we’ll need a few guiding principles:

#1) We’ll need to insist that all robots – all autonomous computational agents that we’ll soon find spread out on the streets, in the skies, and on the seas – have some kind of “dog tag” identifying their human owner, and we need to insist that the owner take responsibility for any damage caused by the robot. (Without this, society just bears the cost while the manufacturer keeps the profit. This is patently unfair to those who will not benefit from the new revolution.)

#2) We must prohibit the development of human-machine hybrids and of radically enhanced human beings, and we must not grant citizenship to robots and avatars. I say this not because of moral qualms (though these might be entirely appropriate as well!) but because democracy cannot survive amid a caste system in which some “enhanced” people are inherently “better” than others. It’s hard enough to achieve consensus in our current multicultural society, where individual genome sequences differ by only a small fraction of one percent. We cannot risk the further levels of disruption inherent in a “multi-species democracy,” with members constructed out of elements from different parts of the periodic table, working at radically different processing speeds, and communicating via different wavelengths of the electromagnetic spectrum.

Change at this level would disrupt all the belief systems underlying our social and legal system. Our laws and our democracy depend on shared beliefs: ideas about what it means to be human, what it means to commit a crime, how punishment should be meted out, and how rehabilitation might be achieved. These, in turn, rest on our shared history, on a shared set of human needs and desires, and on a shared set of physical principles underlying the operation of the human body, mind, and brain.

Of course, legal standards have evolved since the Code of Hammurabi in ancient Mesopotamia. But these normative, cultural patterns of thought change at a rate radically different from (and far slower than) the pace at which this new technology develops. Society has no simple “update now” button (as with an Excel spreadsheet) that would let us recalibrate everything in the legal system and resynchronize the way every citizen thinks about the meaning of “being human.” We’ll have enough trouble understanding how people can handle this new technology without simultaneously introducing new, hybrid, transhuman entities.

In short: We’ll need some broader legal framework that will help keep us grounded in existing traditions. We’ll need some “pole star” to guide us as we work to address all the other challenges of the modern age. We’ll need to remember what it means to be human, to live in a community that works together to help maintain prospects for a livable human future. We cannot afford to be pressured by the high-tech industry when the entire future of our species is at stake. It would be silly to heed complaints that “this will slow us down too much” when the very speed of the change is one of the things that makes it so hard for society to respond effectively.

This post was originally published at carlpabo.com.
